OSoMe Awesome Speakers

OSoMe Awesome Speakers is a virtual event that aims to provide a platform for leading scholars and researchers to share their work and insights about social media manipulation and misinformation. The series will bring together experts from various fields to discuss a range of topics related to information integrity. The series is an excellent opportunity for academics, journalists, and industry professionals to learn about the latest developments in social media research and engage in insightful discussions with experts. (Note: "OSoMe Awesome" is pronounced "awesome awesome.")

2025-2026

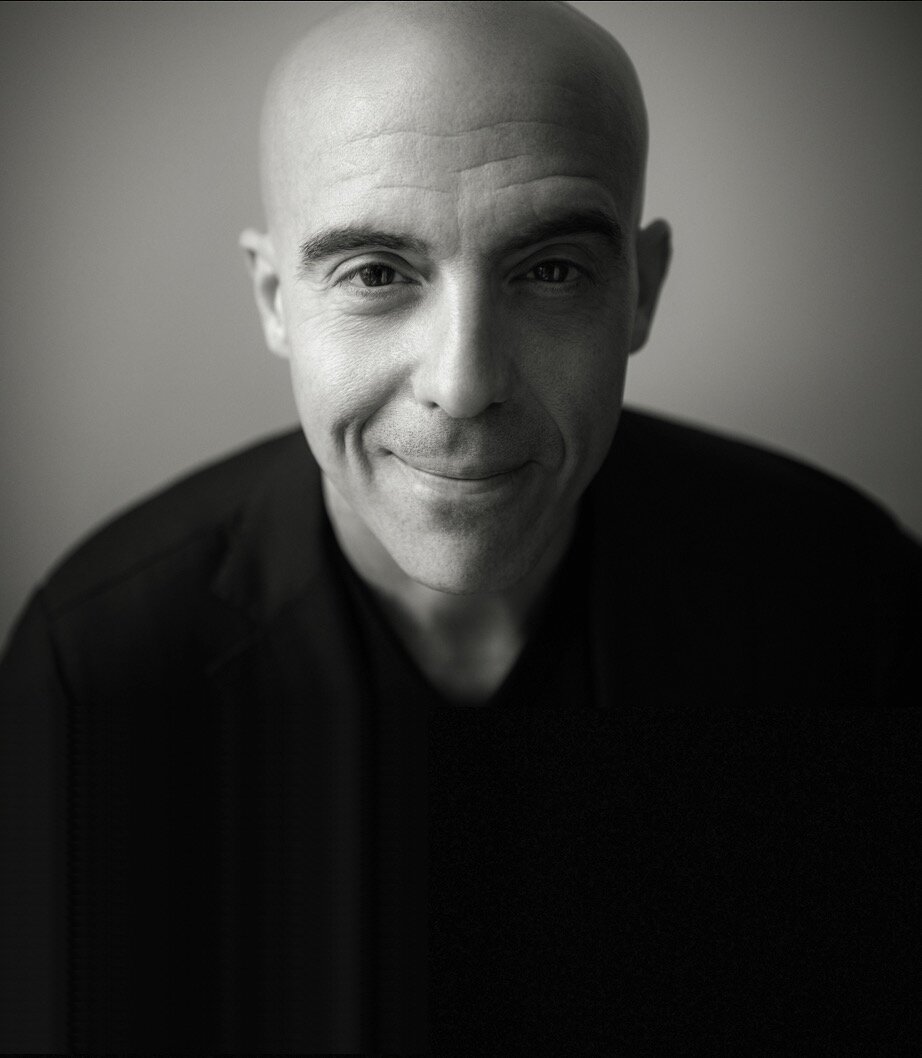

Patrick Warren

Clemson University

Professor

TALK TITLE: Measuring the Impact of a Large State-Sponsored Narrative-Laundering Campaign: The case of the Storm-1516 attack on Vladimir Zelensky

ABSTRACT: We investigate the impact of a Large State-Sponsored Narrative-Laundering Campaign that targeted the reputation of Vladimir Zelensky throughout much of 2023 and 2024. This campaign included the introduction, laundering, and amplification of many false narratives that related to corruption by Zelensky or his family over this period. By tracking 10 narrative-specific sets of keywords, we show that discussions of these false narratives comprised a substantial share of the total discourse on X pertaining to Zelensky in the weeks after their introduction. Only a small share of this discourse involved fact-checking those narratives. That shift also led to a substantial increase in the share of the discourse pertaining to Zelensky that included implications or accusations of corruption, in general. Quantitatively, the share of the discourse including implications of corruption increased by about 8 percentage points in the week after the narrative was introduced, on a base rate of about 10 percent, although the increase disappears by the next week. This pattern also appears in Google search trends, but persists even longer in that setting, with searches involving the false-corruption narratives increasing (from a base of 0) to 6 percent of total Zelensky-related searches in the week they are introduced, to 3 percent in the following week, and to 1 percent in the third week after introduction. These patterns suggest that this sort of narrative-laundering operation can have a substantial impact in organic online discourse and search behavior.

BIO: Patrick Warren is a professor of economics and co-director of the Media Forensics Hub at Clemson University. Before coming to Clemson, he studied at MIT, earning a Ph.D. in economics (2008), and an undergraduate degree from the South Carolina Honors College (BArSc, 2001). His research investigates how organizations interact with the information environment: for-profit and non-profit firms, bureaucracies, political parties and even armies. He has written numerous peer-reviewed articles in top economics, political science, communication, and law journals. He has also served on the board of the Society for Institutional and Organizational Economics, been a visiting associate professor at Northwestern University and a visiting scholar at the RAND Corporation.

Petter Törnberg

University of Amsterdam

Assistant Professor

TALK TITLE: Studying Digital Media in the Post-Social Media Era

ABSTRACT: For two decades, “social media” has been the master lens through which scholars have understood digital communication – an era defined by user-generated content, networked publics, and participatory culture. Yet that era is drawing to a close. Platforms are in decline as users flee polarization, misinformation, commercial clutter, and algorithmic noise for private chats and closed networks. Into this vacuum steps generative AI, as platforms replace disappearing users with AI-generated interaction, filling feeds with synthetic voices and auto-produced media. What once promised conversation now resembles a new form of broadcast media – engineered with algorithmic precision for maximal attention extraction. How, then, do we study digital media in the post-social media era? This talk outlines a research agenda for a post-social media studies: a call to develop new concepts, methods, and critical vocabularies for a landscape where publics are re-assembled by algorithms, communication becomes synthetic, and our attention economy is redefined by systems that no longer need our participation — only our gaze.

BIO: Petter Törnberg studies the intersection of AI, social media, and politics at the University of Amsterdam. His recent books include “Intimate Communities of Hate: Why Social Media Fuels Far-Right Extremism” (with Anton Törnberg; 2024) and “Seeing Like a Platform: An inquiry into the condition of digital modernity” (with Justus Uitermark; 2025).

Michelle A. Amazeen

Boston University

Associate Dean of Research and Associate Professor

TALK TITLE: Content Confusion: Media Transparency and Misinformation in the Digital Age

ABSTRACT: While social media is often blamed for the spread of disinformation, mainstream news media also plays a significant role. In Content Confusion, Michelle Amazeen explores how news outlets use native advertising—paid content disguised as editorial news—and how this practice influences both audience perceptions and the substance of adjacent journalism. This talk will focus on the amplification of native ads via social media, where mandated disclosures often vanish, causing sponsored content to appear as independent journalism. As a result, social media users may inadvertently share this content, further blurring the lines between advertising and news and challenging the public’s ability to stay accurately informed.

BIO: Dr. Michelle A. Amazeen is Associate Dean of Research and Associate Professor of Mass Communication in the College of Communication at Boston University and directs the Communication Research Center. Dr. Amazeen examines persuasion and misinformation, exploring the nature and persuasive effects of misinformation and efforts to correct it. She employs a variety of qualitative and quantitative methods to yield results with practical applications for journalists, educators, policymakers, and consumers who strive to foster recognition of and resistance to persuasion and misinformation in media. Her work has appeared in publications such as Communication Monographs, Digital Journalism, Journalism & Mass Communication Quarterly, Human Communication Research, New Media & Society, and Science Communication. She is one of 22 prominent scholars from around the globe with expertise in misinformation and its debunking who contributed to The Debunking Handbook 2020. She is currently a co-investigator on the Boston University Climate Disinformation Initiative, with a focus on climate issues in native advertising. Her related book, Content Confusion: News Media, Native Advertising, and Policy in an Era of Disinformation (MIT Press) will be published in November 2025.

James Evans

University of Chicago

Max Palevsky Professor

TALK TITLE: Information Laundering: How Misinformation Gets Cleaned and Dirty Across Digital and Policy [This talk will be in a hybrid format and will be held in room 2005 of the Luddy AI building.]

ABSTRACT: Information integrity is threatened by distortion at multiple scales—from individual social media interactions to institutional policy-making. This session presents two complementary studies examining how misinformation circulates and gains legitimacy through distinct mechanisms. First, we analyze how scientific findings are misinterpreted as they travel from peer-reviewed journals into U.S. policy documents. Using language models to assess over 1.2 million citations across three presidential administrations, we find that ideological think tanks are nearly twice as likely to misinterpret science as governments or intergovernmental organizations, with distortions strategically aligned with policy positions and disproportionately targeting high-impact journals. Government documents are significantly more likely to cite think tank reports that misinterpret science, revealing the structure of "information laundering"—strategic diffusion of distorted evidence through chains of indirect citation that embed misrepresented findings into policy discourse. Second, we examine the downstream consequences of misinformation correction on social media. Analyzing over 700,000 tweets from users tagged for spreading misinformation, we find that individual fact-checking causes users to retreat into information bubbles, reducing both political and content diversity. However, collective verification systems like Twitter's Community Notes mitigate these effects through peer-reviewed, less toxic, and more deliberative corrections. Together, these studies illuminate a troubling asymmetry: institutions designed to synthesize knowledge for collective action often amplify distortion, while crowd-based systems designed to correct misinformation can inadvertently narrow the information ecosystem. We discuss implications for designing information environments that promote both accuracy and epistemic openness.

BIO: James Evans is the Max Palevsky Professor of Sociology, Director of Knowledge Lab and Founding Faculty Co-Director of Chicago Center Computational Social Science at the University of Chicago, the Santa Fe Institute, and Google. Evans' research uses large-scale data, machine learning and generative models to understand how collectives of humans and machines think and what they (can) know. This involves inquiry into the emergence of ideas, shared patterns of reasoning, and processes of attention, communication, agreement, and certainty. Thinking and knowing collectives like science, the Web, and civilization as a whole involve complex networks of diverse human and machine intelligences, collaborating and competing to achieve overlapping aims. Evans' work connects the interaction of these agents with the knowledge they produce and its value for themselves and the system. His work is supported by numerous federal agencies (NSF, NIH, DOD), foundations and philanthropies, has been published in Nature, Science, PNAS, and top social and computer science outlets, and has been covered by global news outlets from the Economist, the Atlantic, and the New York Times to Le Monde, El Pais, and Die Zeit.

2024-2025


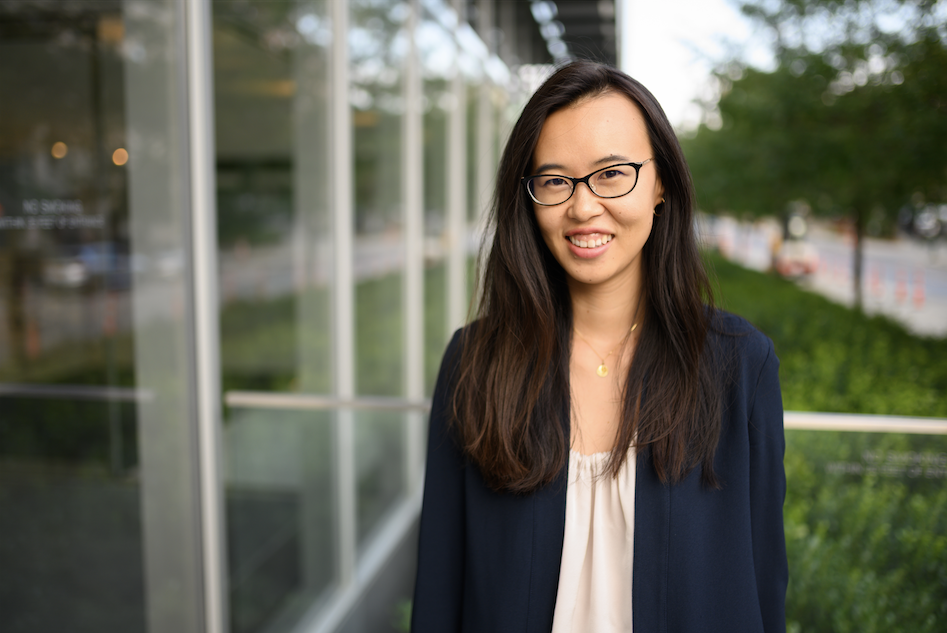

Josephine ("Jo") Lukito

University of Texas at Austin

Assistant Professor

TALK TITLE: Challenges and Solutions for Multi-Platform Studies of Election Discourse

ABSTRACT: Over the past two decades, U.S. political candidates have relied increasingly on a cornucopia of social media platforms to communicate with their constituents and voters. This presentation will cover ongoing work from the Media and Democracy Data Cooperative to collect and study this communication in the 2022 U.S. midterm elections (with eight platforms) and the 2024 U.S. presidential and congressional elections (with 9-11 platforms). Results of the 2022 U.S. midterm election show that differences between candidates may be driven more by platform affordances than by partisan differences. Preliminary results of the 2024 U.S. elections suggest that not only will data collection be more challenging, but candidates are expanding their repertoire of platforms as the media ecology becomes more image- and video-driven.

BIO: Josephine ("Jo") Lukito (she/her) is an Assistant Professor at the University of Texas at Austin’s School of Journalism and Media and the Director of the Media & Democracy Data Cooperative. Jo’s work uses computational and machine learning approaches to study political language, with a focus on harmful digital content and multi-platform discursive flows. She also studies data access for researchers and journalists. Her work has been published in top-tiered journals such as Political Communication and Social Media + Society, and featured in Wired, Columbia Journalism Review, and Reuters.

Kristina Lerman

University of Southern California

Principal Scientist

TALK TITLE: Collective Psychology of Social Media: Emotions, Conflict, and Mental Health in the Digital Age

ABSTRACT: Social media has linked people on a global scale, rapidly transforming how we communicate and share information, emotions, and feelings. This massive interconnectedness created new vulnerabilities in the form of societal division, growing mistrust and deteriorating mental health. Using advanced tools to recognize emotions in online discussions at scale, I describe new insights into the collective psychology of social media. My talk focuses on three case studies: affective polarization, intergroup conflict and psychological contagion. Affective polarization refers to the emotional dimensions of political polarization, where different groups not only disagree but also dislike and distrust each other. I show that the “in-group love, out-group hate” that characterizes affective polarization also exists in online interactions. I introduce a model of opinion dynamics in affectively polarized systems and show that it explains how out-group hate amplifies groupthink, and why exposure to out-group’s beliefs, long thought to be the solution to reducing polarization, often backfires. Next, I analyze the language of “othering” and show how fear speech and hate speech work in tandem to fuel intergroup conflict and justify violence. Finally, turning to mental health, I show that online eating disorders communities grow through mechanisms similar to online radicalization. Using generative AI we peer into the collective mindsets of online communities to identify unhealthy cognitions that would be missed by traditional moderation. This research provides new insights into the complex social and emotional dynamics of political discourse and mental health in the digital age.

BIO: Kristina Lerman is a Principal Scientist at the University of Southern California Information Sciences Institute and holds a joint appointment as a Research Professor in the USC Computer Science Department. Trained as a physicist, she now applies network analysis and machine learning to problems in computational social science, including crowdsourcing, social network and social media analysis. Her work on modeling and understanding cognitive biases in social networks has been covered by the Washington Post, Wall Street Journal, and MIT Tech Review. She is a fellow of the AAAI.

Jeremy Blackburn

Binghamton University

Associate Professor

TALK TITLE: Understanding the Prominence of Alternative Social Media Platforms

ABSTRACT: While social media and the Web have become societally ubiquitous, not all of their impact has been positive. The geographic and temporal barriers that social media has removed from our ability to share ideas and information also enabled fringe ideologies and communities to grow in unprecedented fashion. In this talk, I will explore how fringe online communities have evolved from isolated groups on mainstream social media platforms to quickly building out their own, alternative social media platforms. I will demonstrate that even though these new platforms are relatively small and not as featureful as the mainstream platforms they emulate, they provide an environment that allows not just the proliferation, but sustainability of fringe communities. In particular, I will discuss how approximate technical equivalents to multi-modal mainstream social media platforms have become enclaves for content creators that have been banned from the mainstream platforms they were born in, and have clearly distinguishable differences in terms of content. I will also discuss how fringe platforms, while enabling free and open discussion, also harbor dangerous communities that espouse harmful, and sometimes violent, rhetoric.

BIO: Jeremy Blackburn joined the Department of Computer Science at Binghamton University in fall 2019. Jeremy is broadly interested in data science, with a focus on large-scale measurements and modeling. His largest line of work is in understanding jerks on the Internet. His research into understanding toxic behavior, hate speech, and fringe and extremist Web communities has been covered in the press by The Washington Post, the New York Times, The Atlantic, The Wall Street Journal, the BBC and New Scientist, among others.

Deen Freelon

University of Pennsylvania

Presidential Professor

TALK TITLE: Computational Research in the Post-API Age

ABSTRACT: In 2018 I published a short essay titled “Computational Research in the Post-API Age” in the journal Political Communication (Freelon, 2018). Its goals were simple: to warn computational researchers in the social sciences that the days of free and easily-accessible digital communication data were coming to an end, and to start a conversation about how to respond. The essay was initially inspired by the prohibition of automated data collection from Facebook’s Graph API, which occurred in March 2018 in the wake of the Cambridge Analytica scandal. This corporate decision effectively eliminated most authorized means of collecting Facebook data (except those involving direct collaboration with Meta researchers) until the CrowdTangle service was opened to academic researchers in the summer of 2020. Meta shelved CrowdTangle in August 2024, replacing it with yet another data access regime, the Meta Content Library. Other social platforms, including Twitter/X, Reddit, and TikTok, have also substantially modified their data access policies over the years. Some of the issues I raised in my Political Communication essay are still relevant today, while others are less so. I failed entirely to address still other major developments in access to digital data over the ensuing years. This presentation, which is based on a commissioned journal article, will build on my earlier piece in three ways: first, it will recount a concise history of social media data access informed by official documentation and my own professional observations as one of the first Communication researchers to analyze social media data computationally. Second, it will sketch the present state of digital communication data access in context with past such “ages,” making practical recommendations and generally characterizing the moment for posterity. The third section will be devoted to the future, but instead of making predictions, it will adopt a normative approach, advocating for corporate and governmental data access policies that balance the researcher’s interest in data usability, the public’s interests in privacy and impactful research, and business interests in transparency and good corporate citizenship.

BIO: Deen Freelon is a Presidential Professor at the Annenberg School for Communication. A widely recognized expert on digital politics and computational social science, he has authored or coauthored over 60 book chapters, funded reports, and articles in journals such as Nature, Science, and the Proceedings of the National Academy of Sciences. He was one of the first communication researchers to apply computational methods to social media data and has developed eight open-source research software packages. The first of these, ReCal, is a free online intercoder reliability service that has been running continuously since 2008 (when he was a Ph.D. student) and used by tens of thousands of researchers worldwide. He has been awarded over $6 million in research funding from grantmakers including the Knight Foundation, the Hewlett Foundation, the Spencer Foundation, and the US Institute for Peace. He was a founding member and remains Senior Researcher at the Center for Information, Technology, and Public Life at the University of North Carolina at Chapel Hill, one of five academic research centers in the Knight Research Network (est. 2019) to receive its highest level of funding. His research and commentary have been featured in press outlets including the Washington Post, NPR, The Atlantic, Buzzfeed, Vox, USA Today, the BBC, PBS NewsHour, CBS News, NBC News, and many others. Unlike many computational social scientists, he centers questions of identity and power in his work, paying particular attention to race, gender, and ideology.

Amy Zhang

University of Washington

Assistant Professor

TALK TITLE: Platform Designs and Tools to Support Users in Addressing Misinformation on Social Media

ABSTRACT: What role should everyday users play in addressing misinformation on social media? This question became newly urgent after Meta's recent decision to cancel its fact-checking program in favor of crowdsourced "Community Notes." But simply placing the onus on users without thoughtful consideration or support is setting them up to fail—indeed, current platform designs may actively undermine users' efforts. In this talk, I’ll present our research on building user-facing tools and novel platform designs that empower everyday users to improve the quality of information on social media and be more resilient and effective in their work. Specifically, I’ll discuss how to better support four key roles for users and communities: 1) as viewers who can more easily spot misinformation, 2) as would-be posters and sharers who consider the accuracy of what they share, 3) as active responders addressing misinformation in their communities, and 4) as contributors to large-scale crowdsourced initiatives. By combining collective and machine intelligence, I argue that users can play a more meaningful role—alongside efforts led by platforms and other stakeholders—towards a stronger and more collaborative defense against misinformation.

BIO: Amy X. Zhang is an assistant professor at University of Washington's Allen School of Computer Science and Engineering. Previously, she was a 2019-20 postdoctoral researcher at Stanford University's Computer Science Department after completing her Ph.D. at MIT CSAIL in 2019, where she received the George Sprowls Best Ph.D. Thesis Award at MIT in computer science. During her Ph.D., she was an affiliate and 2018-19 Fellow at the Berkman Klein Center at Harvard University, a Google Ph.D. Fellow, and an NSF Graduate Research Fellow. Her work has received a best paper award at ACM CSCW, a best paper honorable mention award at ACM CHI, and has been profiled on BBC's Click television program, CBC radio, and featured in articles by ABC News, The Verge, New Scientist, and Poynter. She is a founding member of the Credibility Coalition, a group dedicated to research and standards for information credibility online. She has interned at Microsoft Research and Google Research. She received an M.Phil. in Computer Science at the University of Cambridge on a Gates Fellowship and a B.S. in Computer Science at Rutgers University, where she was captain of the Division I Women's tennis team.

2023-2024

Joshua Tucker

New York University

Professor of Politics, affiliated Professor of Russian and Slavic Studies, and affiliated Professor of Data Science

TALK TITLE: Exposure to the Russian Internet Research Agency foreign influence campaign on Twitter in the 2016 US election and its relationship to attitudes and voting behavior

ABSTRACT: There is widespread concern that foreign actors are using social media to interfere in elections worldwide. Yet data have been unavailable to investigate links between exposure to foreign influence campaigns and political behavior. Using longitudinal survey data from US respondents linked to their Twitter feeds, we quantify the relationship between exposure to the Russian foreign influence campaign and attitudes and voting behavior in the 2016 US election. We demonstrate, first, that exposure to Russian disinformation accounts was heavily concentrated: only 1% of users accounted for 70% of exposures. Second, exposure was concentrated among users who strongly identified as Republicans. Third, exposure to the Russian influence campaign was eclipsed by content from domestic news media and politicians. Finally, we find no evidence of a meaningful relationship between exposure to the Russian foreign influence campaign and changes in attitudes, polarization, or voting behavior. The results have implications for understanding the limits of election interference campaigns on social media.

BIO: Joshua A. Tucker is Professor of Politics, affiliated Professor of Russian and Slavic Studies, and affiliated Professor of Data Science at New York University. He is the Director of NYU’s Jordan Center for Advanced Study of Russia and co-Director of the NYU Center for Social Media and Politics; he also served as a co-author/editor of the award-winning politics and policy blog The Monkey Cage at The Washington Post for over a decade. His research focuses on the intersection of social media and politics, including partisan echo chambers, online hate speech, the effects of exposure to social media on political knowledge, online networks and protest, disinformation and fake news, how authoritarian regimes respond to online opposition, and Russian bots and trolls. He is the co-Chair of the external academic team for the U.S. 2020 Facebook & Instagram Election Study, serves on the advisory board of the American National Election Study, the Comparative Study of Electoral Systems, and numerous academic journals, and was the co-founder and co-editor of the Journal of Experimental Political Science. An internationally recognized scholar, he has been a keynote speaker for conferences in Sweden, Denmark, Italy, Brazil, the Netherlands, Russia, and the United States, and has given over 200 invited research presentations at top domestic and international universities and research centers. His most recent books are the co-authored Communism’s Shadow: Historical Legacies and Contemporary Political Attitudes (Princeton University Press, 2017), and the co-edited Social Media and Democracy: The State of the Field (Cambridge University Press, 2020).

Gianluca Stringhini

Boston University

Associate Professor, Electrical and Computer Engineering Department

TALK TITLE: Computational Methods to Measure and Mitigate Online Disinformation

ABSTRACT: The Web has allowed disinformation to reach an unprecedented scale, allowing it to become ubiquitous and harm society in multiple ways. To be able to fully understand this phenomenon, we need computational tools able to trace false information, monitoring a plethora of online platforms and analyzing not only textual content but also images and videos. In this talk, I will present my group's efforts in developing tools to automatically monitor and model online disinformation. These tools allow us to recommend social media posts that should receive soft moderation, to identify false and misleading images posted online, and to detect inauthentic social network accounts that are likely involved in state-sponsored influence campaigns. I will then discuss our research on understanding the potentially unwanted consequences of suspending misbehaving users on social media.

BIO: Gianluca Stringhini is an Associate Professor in the Electrical and Computer Engineering Department at Boston University, holding affiliate appointments in the Computer Science Department, in the Faculty of Computing and Data Sciences, in the BU Center for Antiracist Research, and in the Center for Emerging Infectious Diseases Policy & Research. In his research Gianluca applies a data-driven approach to better understand malicious activity on the Internet. Through the collection and analysis of large-scale datasets, he develops novel and robust mitigation techniques to make the Internet a safer place. His research involves a mix of quantitative analysis, (some) qualitative analysis, machine learning, crime science, and systems design. Over the years, Gianluca has worked on understanding and mitigating malicious activities like malware, online fraud, influence operations, and coordinated online harassment. He received multiple prizes including an NSF CAREER Award in 2020, and his research won multiple Best Paper Awards. Gianluca has published over 100 peer reviewed papers including several in top computer security conferences like IEEE Security and Privacy, CCS, NDSS, and USENIX Security, as well as top measurement, HCI, and Web conferences such as IMC, ICWSM, CHI, CSCW, and WWW.

Luca Luceri

University of Southern California

Research Scientist

TALK TITLE: AI-Driven Approaches for Countering Influence Campaigns in Socio-Technical Systems

ABSTRACT: The proliferation of online platforms and social media has sparked a surge in information operations designed to manipulate public opinion on a massive scale, posing significant harm at both the individual and societal levels. In this talk, I will outline a research agenda focused on identifying, investigating, and mitigating orchestrated influence campaigns and deceptive activities within socio-technical systems. I will start by detailing my research efforts in designing AI-based approaches for detecting state-backed troll accounts on social media. Modeling human decision-making as a Markov Decision Process and using an Inverse Reinforcement Learning framework, I will illustrate how we can extract the incentives that social media users respond to and differentiate genuine users from state-sponsored operators. Next, I will delve into a set of innovative approaches I developed to uncover signals of inauthentic, coordinated behaviors. By combining embedding techniques to unveil unexpected similarities in the activity patterns of social media users, along with graph decomposition methods, I will show how we can reveal network structures that pinpoint coordinated groups orchestrating information operations. Through these approaches, I will provide actionable insights to inform regulators in shaping strategies to tame harm, discussing challenges and opportunities to improve the resilience of the information ecosystem, including the potential for interdisciplinary collaborations to address these complex issues.

This is a hybrid event, co-hosted by the Center for Complex Networks and Systems Research. The talk will be held in room 2005 of the Luddy AI building. Please indicate if you will be attending in-person as food will be provided.

BIO: Luca Luceri is a Research Scientist at the Information Sciences Institute (ISI) at the University of Southern California (USC). His research incorporates machine learning, data and network science, with a primary focus on detecting and mitigating online harms in socio-technical systems. He investigates deceptive and malicious behaviors on social media, with a particular emphasis on problems such as social media manipulation, (mis-)information campaigns, and Internet-mediated radicalization processes. His research advances AI/ML/NLP for social good, computational social science, and human-machine interaction. In his role as a Research Scientist at ISI, Luca Luceri serves as a co-PI of the DARPA-funded program INCAS, aiming to develop techniques to detect, characterize, and track geopolitical influence campaigns. Additionally, he is the co-PI of a Swiss NSF-sponsored project called CARISMA, which develops network models to simulate the effects of moderation policies to combat online harms.

Franziska Roesner

University of Washington

Associate Professor, Paul G. Allen School of Computer Science & Engineering

TALK TITLE: Supporting Effective Peer Response to Misinformation on Social Media

ABSTRACT: Online information is difficult to navigate. While large organizations and social media platforms have taken steps to amplify accurate information and combat rumors and misinformation online, much work to offer correct information and quality resources happens at a more local scale, with individuals and community moderators engaging with (mis)information posted by their peers. In this talk, I will discuss the role of peer-based misinformation response, drawing on results from qualitative and quantitative studies of people’s engagement with potential misinformation online, and sharing our vision and preliminary learnings developing a software tool (the “ARTT Guide”) that supports constructive online conversations and peer-based misinformation response.

BIO: Franziska (Franzi) Roesner is an Associate Professor in the Paul G. Allen School of Computer Science & Engineering at the University of Washington, where she co-directs the Security and Privacy Research Lab. Her research focuses broadly on computer security and privacy for end users of existing and emerging technologies. Her work has studied topics including online tracking and advertising, security and privacy for marginalized and vulnerable user groups, security and privacy in emerging augmented reality (AR) and IoT platforms, and online mis/disinformation. She is the recipient of a Google Security and Privacy Research Award and a Google Research Scholar Award, a Consumer Reports Digital Lab Fellowship, an MIT Technology Review “Innovators Under 35” Award, an Emerging Leader Alumni Award from the University of Texas at Austin, and an NSF CAREER Award. She serves on the USENIX Security and USENIX Enigma Steering Committees (having previously co-chaired both conferences). More information at https://www.franziroesner.com.

Brendan Nyhan

Dartmouth College

James O. Freedman Presidential Professor, Department of Government

TALK TITLE: Subscriptions and external links help drive resentful users to alternative and extremist YouTube videos

ABSTRACT: Do online platforms facilitate the consumption of potentially harmful content? Using paired behavioral and survey data provided by participants recruited from a representative sample in 2020 (n=1,181), we show that exposure to alternative and extremist channel videos on YouTube is heavily concentrated among a small group of people with high prior levels of gender and racial resentment. These viewers often subscribe to these channels (prompting recommendations to their videos) and follow external links to them. In contrast, non-subscribers rarely see or follow recommendations to videos from these channels. Our findings suggest YouTube's algorithms were not sending people down "rabbit holes" during our observation window in 2020, possibly due to changes that the company made to its recommender system in 2019. However, the platform continues to play a key role in facilitating exposure to content from alternative and extremist channels among dedicated audiences.

BIO: Brendan Nyhan is the James O. Freedman Presidential Professor of Government at Dartmouth College. His research, which focuses on misperceptions about politics and health care, has been published in journals including the American Journal of Political Science, Journal of Politics, Nature Human Behaviour, Proceedings of the National Academy of Sciences, Pediatrics, and Vaccine. Nyhan has been named a Guggenheim Fellow by the Guggenheim Foundation, an Andrew Carnegie Fellow by the Carnegie Corporation of New York, and a Belfer Fellow by the Anti-Defamation League. He is also a co-founder of Bright Line Watch, a non-partisan group monitoring the state of American democracy; a contributor to The Upshot at The New York Times; and co-author of All the President's Spin, a New York Times bestseller that Amazon named one of the best political books of 2004. Nyhan received his Ph.D. from the Department of Political Science at Duke University and previously served as a Robert Wood Johnson Scholar in Health Policy Research and Professor of Public Policy at the Ford School of Public Policy at the University of Michigan.

Ceren Budak

University of Michigan

Associate Professor, School of Information

TALK TITLE: The Dynamics of Unfollowing Misinformation Spreaders

ABSTRACT: Many studies explore how people "come into" misinformation exposure. But much less is known about how people "come out of" misinformation exposure. Do people organically sever ties to misinformation spreaders? And what predicts doing so? We will discuss this topic in this talk, describing the following work. Over six months, we tracked the frequency and predictors of 1M followers unfollowing health misinformation spreaders on Twitter. We found that misinformation ties are persistent. The fact that users rarely unfollow misinformation spreaders suggests a need for external nudges and the importance of preventing exposure from arising in the first place. Although unfollowing is generally infrequent, the factors most associated with it are redundancy and ideology.

BIO: Ceren Budak is an Associate Professor at the School of Information at the University of Michigan. She utilizes network science, machine learning, and crowdsourcing methods and draws from scientific knowledge across multiple social science communities to contribute computational methods to the field of political communication. Her recent scholarship is focused on topics related to news production and consumption, election campaigns, and online social movements.

Renée DiResta

Stanford Internet Observatory

Technical Research Manager

TALK TITLE: Generative AI and the Challenge of Unreality

ABSTRACT: Generative AI technology has reshaped the landscape of disinformation and influence operations, though the effect of unreality extends far beyond these domains. Just as social media altered the capacity for distribution, generative AI has transformed the capacity for creation. This talk explores how the ability to create virtually anything has significant implications for trust and safety on digital platforms, particularly in the realm of child safety (CSAM). It emphasizes that these changes are not mere hypotheticals but are already shaping our online environment, and discusses the ways that platform trust and safety teams, as well as policymakers, must grapple with these new challenges, as we all adapt to a world in which unreality becomes the norm.

BIO: Renée DiResta is the Technical Research Manager at the Stanford Internet Observatory, a cross-disciplinary program of research, teaching and policy engagement for the study of abuse in current information technologies. Renée’s work examines the spread of narratives across social and media networks, how distinct actor types leverage the information ecosystem to exert influence, and how policy, education, and design responses can be used to mitigate manipulative dynamics. Renée has advised Congress, the State Department, and other academic, civic, and business organizations. At the behest of SSCI, she led outside teams investigating both the Russia-linked Internet Research Agency’s multi-year effort to manipulate American society and elections, and the GRU influence campaign deployed alongside its hack-and-leak operations in the 2016 election. Renée is an Ideas contributor at Wired and The Atlantic, an Emerson Fellow, a 2019 Truman National Security Project fellow, a 2019 Mozilla Fellow in Media, Misinformation, and Trust, a 2017 Presidential Leadership Scholar, and a Council on Foreign Relations term member.

David Lazer

Northeastern University

University Distinguished Professor

TALK TITLE: The Emergent Structure of the Online Information Ecosystem

ABSTRACT: The first part of this presentation examines the emergent and sometimes paradoxical logic of the internet news ecosystem, in particular: (1) collectively, news diets have become far more concentrated in a small number of outlets; (2) however, individuals have relatively diverse news diets, almost certainly far more diverse than was plausible pre-Internet (as measured by number of unique content producers); (3) the social-algorithmic curation system of the Internet tends to point people to content aligned with their preferences, sometimes in unlikely places. The greater diversity of news consumption, as measured by number of unique outlets, may not actually result in diversity of content. The second part of the presentation will discuss the development of the National Internet Observatory, a large, NSF-supported effort to create a privacy-preserving data collection/data analytic system for the broader research community.

BIO: David Lazer is University Distinguished Professor of Political Science and Computer Sciences at Northeastern University. Prior to coming to Northeastern University, he was on the faculty at the Harvard Kennedy School (1998-2009). In 2019, he was elected a fellow of the National Academy of Public Administration. He has published prominent work on misinformation, democratic deliberation, collective intelligence, computational social science, and algorithmic auditing, across a wide range of prominent journals such as Science, Nature, Proceedings of the National Academy of Sciences, the American Political Science Review, Organization Science, and the Administrative Science Quarterly. His research has received extensive coverage in the media, including the New York Times, NPR, the Washington Post, the Wall Street Journal, and CBS Evening News. He is a co-leader of the COVID States Project, one of the leading efforts to understand the social and political dimensions of the pandemic in the United States. He has been a PI on more than $30m of grants. Dr. Lazer has served in multiple leadership and editorial positions, including as a board member for the International Society for Computational Social Science (ISCSS) and the International Network for Social Network Analysis (INSNA), as reviewing editor for Science, as associate editor of Social Networks and Network Science, and on numerous other editorial boards and program committees.

David Broniatowski

George Washington University

Associate Professor

TALK TITLE: The Efficacy of Facebook's Vaccine Misinformation Policies and Architecture During the COVID-19 Pandemic

ABSTRACT: Online misinformation promotes distrust in science, undermines public health, and may drive civil unrest. During the coronavirus disease 2019 pandemic, Facebook—the world’s largest social media company—began to remove vaccine misinformation as a matter of policy. We evaluated the efficacy of these policies using a comparative interrupted time-series design. We found that Facebook removed some antivaccine content, but we did not observe decreases in overall engagement with antivaccine content. Provaccine content was also removed, and antivaccine content became more misinformative, more politically polarized, and more likely to be seen in users’ newsfeeds. We explain these findings as a consequence of Facebook’s system architecture, which provides substantial flexibility to motivated users who wish to disseminate misinformation through multiple channels. Facebook’s architecture may therefore afford antivaccine content producers several means to circumvent the intent of misinformation removal policies.

BIO: David A. Broniatowski, Director of the Decision Making and Systems Architecture Laboratory, conducts research in decision making under risk, group decision making, the design and analysis of complex systems, and behavioral epidemiology. This research program draws upon a wide range of techniques including formal mathematical modeling, experimental design, automated text analysis and natural language processing, social and technical network analysis, and big data. His work on systematic distortions of public opinion about vaccines on social media by state-sponsored trolls has been widely reported in the academic and popular press.

David Rand

Massachusetts Institute of Technology

Professor of Management Science, Sloan School

TALK TITLE: Reducing misinformation sharing on social media using digital ads

ABSTRACT: Interventions to reduce misinformation sharing have been a major focus in recent years. Developing “content-neutral” interventions that do not require specific fact-checks or warnings related to individual false claims is particularly important in developing scalable solutions. Here, we provide the first evaluations of a content-neutral intervention to reduce misinformation sharing conducted at scale in the field. Specifically, across two on-platform randomized controlled trials, one on Meta’s Facebook (N=33,043,471) and the other on Twitter (N=75,763), we find that simple messages reminding people to think about accuracy, delivered to large numbers of users using digital advertisements, reduce misinformation sharing, with effect sizes on par with what is typically observed in digital advertising experiments. On Facebook, in the hour after receiving an accuracy prompt ad, we found a 2.6% reduction in the probability of being a misinformation sharer among users who had shared misinformation the week prior to the experiment. On Twitter, over more than a week of receiving 3 accuracy prompt ads per day, we similarly found a 3.7% to 6.3% decrease in the probability of sharing low-quality content among active users who shared misinformation pre-treatment. These findings suggest that content-neutral interventions that prompt users to consider accuracy have the potential to complement existing content-specific interventions in reducing the spread of misinformation online.

BIO: David Rand is the Erwin H. Schell Professor and a Professor of Management Science and Brain and Cognitive Sciences at MIT, an affiliate of the MIT Institute for Data, Systems, and Society, and the director of the Human Cooperation Laboratory and the Applied Cooperation Team. Bridging the fields of cognitive science, behavioral economics, and social psychology, David’s research combines behavioral experiments run online and in the field with mathematical and computational models to understand people’s attitudes, beliefs, and choices. His work uses a cognitive science perspective grounded in the tension between more intuitive versus deliberative modes of decision-making. He focuses on illuminating why people believe and share misinformation and “fake news,” understanding political psychology and polarization, and promoting human cooperation. David was named to Wired magazine’s Smart List 2012 of “50 people who will change the world,” chosen as a 2012 Pop!Tech Science Fellow, received the 2015 Arthur Greer Memorial Prize for Outstanding Scholarly Research, was selected as fact-checking researcher of the year in 2017 by the Poynter Institute’s International Fact-Checking Network, and received the 2020 FABBS Early Career Impact Award from the Society for Judgment and Decision Making. Papers he has coauthored have been awarded Best Paper of the Year in Experimental Economics, Social Cognition, and Political Methodology.

Sandra González-Bailón

Annenberg School, University of Pennsylvania

Carolyn Marvin Professor of Communication

TALK TITLE: Social and algorithmic choices in the transmission of information

ABSTRACT: The circulation of information requires prior exposure: we cannot disseminate what we do not see. In online platforms, information exposure results from a complex interaction between social and algorithmic forms of curation that shapes what people see and what they engage with. In this talk, I will discuss recent research investigating the social and technological factors that determine what information we encounter online. I will also discuss why it is important we adopt a systematic approach to mapping the information environment as a whole: this approach is needed to capture aggregate characteristics that go unnoticed if we only focus on individuals. Networks, I will argue, offer measurement instruments that can help us map that landscape and identify pockets of problematic content as well as the types of audiences more likely to engage with it. Networks, I will argue, also help us compare (and differentiate) modes of exposure and the different layers that structure the current media environment.

BIO: Sandra González-Bailón is the Carolyn Marvin Professor of Communication at the Annenberg School for Communication, and Director of the Center for Information Networks and Democracy (cind.asc.upenn.edu). She also has a secondary appointment in the Department of Sociology at Penn. Prior to joining Penn, she was a Research Fellow at the Oxford Internet Institute (2008-2013). She completed her doctoral degree at Nuffield College (University of Oxford) and her undergraduate studies at the University of Barcelona. Her research agenda lies at the intersection of computational social science and political communication. Her applied research looks at how online networks shape exposure to information, with implications for how we think about political engagement, mobilization dynamics, information diffusion, and the consumption of news. Her articles have appeared in journals like PNAS, Science, Nature, Political Communication, the Journal of Communication, and Social Networks, among others. She is the author of the book Decoding the Social World (MIT Press, 2017) and co-editor of the Oxford Handbook of Networked Communication (OUP, 2020).

Andrew Guess

Princeton University

Assistant Professor of Politics and Public Affairs

TALK TITLE: Algorithmic recommendations and polarization on YouTube

ABSTRACT: An enormous body of work argues that opaque recommendation algorithms contribute to political polarization by promoting increasingly extreme content. We challenge this dominant view, drawing on three large-scale, multi-wave experiments with a combined 7,851 human users, consistently showing that extremizing algorithmic recommendations has limited effects on opinions. Our experiments employ a custom-built video platform with a naturalistic, YouTube-like interface that presents real videos and recommendations drawn from YouTube. We experimentally manipulate YouTube's actual recommendation algorithm to create ideologically balanced and slanted variations. Our design allows us to intervene in a cyclical feedback loop that has long confounded the study of algorithmic polarization (the complex interplay between algorithmic supply of recommendations and user demand for consumption) to examine the downstream effects of recommendation-consumption cycles on policy attitudes. We use over 125,000 experimentally manipulated recommendations and 26,000 platform interactions to estimate how recommendation algorithms alter users' media consumption decisions and, indirectly, their political attitudes. Our work builds on recent observational studies showing that algorithm-driven "rabbit holes" of recommendations may be less prevalent than previously thought. We provide new experimental evidence casting further doubt on widely circulating theories of algorithmic polarization, showing that even large (short-term) perturbations of real-world recommendation systems that substantially modify consumption patterns have limited causal effects on policy attitudes. Our methodology, which captures and modifies the output of real-world recommendation algorithms, offers a path forward for future investigations of black-box artificial intelligence systems. However, our findings also reveal practical limits to effect sizes that are feasibly detectable in academic experiments.

BIO: Andrew Guess is an assistant professor of politics and public affairs at Princeton University. He uses a combination of experimental methods, large datasets, machine learning, and innovative measurement to study how people choose, process, spread, and respond to information about politics. He is also a founding co-editor of the Journal of Quantitative Description: Digital Media, with Kevin Munger and Eszter Hargittai.

Kate Starbird

University of Washington

Associate Professor at the Department of Human Centered Design & Engineering

TALK TITLE: Facts, frames, and (mis)interpretations: Understanding rumors as collective sensemaking

ABSTRACT: Polls show that people tend to agree that online misinformation is a critical problem. However, there is less agreement (even among researchers) about what exactly that problem consists of, or how we should address it. For example, we often see the problem described as an issue of “bad facts.” However, after studying online misinformation for over a decade, my team has concluded that people are more often misled not by faulty facts but by misinterpretations and mischaracterizations. Building from that observation, in this talk I'll present a framework for understanding the spread of misinformation (employed here as an umbrella term for several distinct, related phenomena) through the lens of collective sensemaking. Synthesizing prior theoretical work on rumoring, sensemaking and framing, I'll first describe collective sensemaking as a social process that takes place through interaction between evidence, frames, and interpretations. Next, drawing upon empirical case studies, I'll demonstrate how rumors are a natural byproduct of the sensemaking process, how disinformation campaigns attempt to manipulate that process, and how conspiracy theorizing is a type of patterned or corrupted sensemaking. I'll conclude with reflections about how this perspective could inform more nuanced understandings, new ways to operationalize and study these phenomena, and alternative approaches for addressing them.

BIO: Kate Starbird is an Associate Professor at the Department of Human Centered Design & Engineering (HCDE) at the University of Washington (UW). Dr. Starbird’s research sits at the intersection of human-computer interaction and the field of crisis informatics — i.e. the study of how social media and other information-communication technologies are used during crisis events. Currently, her work focuses on the production and spread of online rumors, misinformation, and disinformation during crises, including natural disasters and political disruptions. In particular, she investigates the participatory nature of online disinformation campaigns, exploring both top-down and bottom-up dynamics. Dr. Starbird received her BS in Computer Science from Stanford (1997) and her PhD in Technology, Media, and Society from the University of Colorado (2012). She is a co-founder and currently serves as director of the UW Center for an Informed Public.

Sinan Aral

Massachusetts Institute of Technology

David Austin Professor of Management, IT, Marketing and Data Science

TALK TITLE: A Causal Test of the Strength of Weak Ties

ABSTRACT: I'll present the results of multiple large-scale randomized experiments on LinkedIn’s People You May Know algorithm, which recommends new connections to LinkedIn members, to test the extent to which weak ties increased job mobility in the world’s largest professional social network. The experiments randomly varied the prevalence of weak ties in the networks of over 20 million people over a 5-year period, during which 2 billion new ties and 600,000 new jobs were created. The results provided experimental causal evidence supporting the strength of weak ties and suggested three revisions to the theory. First, the strength of weak ties was nonlinear. Statistical analysis found an inverted U-shaped relationship between tie strength and job transmission such that weaker ties increased job transmission but only to a point, after which there were diminishing marginal returns to tie weakness. Second, weak ties measured by interaction intensity and the number of mutual connections displayed varying effects. Moderately weak ties (measured by mutual connections) and the weakest ties (measured by interaction intensity) created the most job mobility. Third, the strength of weak ties varied by industry. Whereas weak ties increased job mobility in more digital industries, strong ties increased job mobility in less digital industries.

BIO: Sinan Aral is the David Austin Professor of Management, IT, Marketing and Data Science at MIT, Director of the MIT Initiative on the Digital Economy (IDE) and a founding partner at Manifest Capital. He is currently on the Advisory Boards of the Alan Turing Institute (the British National Institute for Data Science in London), the Centre for Responsible Media Technology and Innovation in Bergen, Norway, and C6 Bank, one of the first all-digital banks of Brazil. Sinan’s research and teaching have won numerous awards including the Microsoft Faculty Fellowship, the PopTech Science Fellowship, an NSF CAREER Award, a Fulbright Scholarship, the Jamieson Award for Teaching Excellence (MIT Sloan’s highest teaching honor) and more than ten best paper awards conferred by his colleagues in research. In 2014, he was named one of the “World’s Top 40 Business School Professors Under 40” and, in 2018, became the youngest ever recipient of the Herbert Simon Award of Rajk László College in Budapest, Hungary. In 2018, Sinan published what Altmetrics called the second most influential scientific publication of the year on the “Spread of False News Online,” on the cover of Science. He is also the author of the upcoming book The Hype Machine, about how social media is disrupting our elections, our economies and our lives.